| Issue | Int. J. Simul. Multidisci. Des. Optim. Volume 8, 2017 |
|---|---|
| Article Number | A13 |
| Number of page(s) | 8 |
| DOI | https://doi.org/10.1051/smdo/2017007 |
| Published online | 04 December 2017 |

Research Article

Robust design optimization using the price of robustness, robust least squares and regularization methods

Department of Mechanical Engineering, National University of Sciences & Technology,

Karachi, Pakistan

* e-mail: hbukhari@alum.mit.edu

Received: 24 April 2017

Accepted: 13 October 2017

In this paper a framework for robust optimization of mechanical design problems and process systems that have parametric uncertainty is presented using three different approaches. Robust optimization problems are formulated so that the optimal solution is robust, which means it is minimally sensitive to any perturbations in parameters. The first method uses the price of robustness approach, which assumes the uncertain parameters to be symmetric and bounded. The robustness of the design can be controlled by limiting the number of parameters that can perturb. The second method uses the robust least squares method to determine the optimal parameters when the data itself, rather than the parameters, is subject to perturbations. The last method manages uncertainty by restricting the perturbation on parameters to reduce sensitivity, similar to Tikhonov regularization. The methods are implemented on two sets of problems, one linear and the other non-linear. The methodology is compared with a prior method using multiple Monte Carlo simulation runs, which show that the approach presented in this paper results in better performance.

Key words: robust design / optimization / price of robustness / control of robustness / robust least square

© H.J. Bukhari, published by EDP Sciences, 2017

This is an Open Access article distributed under the terms of the Creative Commons Attribution License (http://creativecommons.org/licenses/by/4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.


1 Introduction

Robust design optimization methods refer to a class of methods that can accommodate uncertainty while maintaining feasibility. The uncertainty in data is accommodated by accepting suboptimal solutions to a nominal design optimization problem. This methodology ensures that the design solution remains feasible and close to optimal even if there is a change in data. Different methodologies for robust design and optimization in mechanical systems have been extensively discussed in the literature [1–14]. Most of these methods assume some kind of distribution for the uncertain variable to optimize the problem.

Most systems in practice are designed deterministically so that they are optimal with respect to certain variables that are assumed to operate strictly under designed conditions. Normal optimization routines either maximize or minimize an objective function given some constraints placed on the problem so that the system is optimal only when the design conditions are met completely. But design variables are inherently stochastic and the environment that they are designed to operate in is uncertain. The uncertainties in the operating environment are introduced from a variety of sources including manufacturing errors and incomplete information about the nature of the problem.

Therefore it is prudent to optimize a design based on the uncertainties that the system might encounter. Robust designs are able to meet design objectives while taking into account aberrations that might affect the system. Such designs are generally suboptimal with respect to the deterministic design but are robust under design uncertainties. The fundamental principle of robust design is to improve product quality or stabilize performance by minimizing the effects of variations without eliminating their causes [15]. Usually sensitivity analysis is done after the optimization procedure to ascertain the effect of a change in one or more variables on the objective function. In contrast, robust design methodology accommodates uncertainty during the design process itself by making sure that the design remains feasible when subject to variation. This reduces the possibility of large-scale design changes after the design has been fielded.

2 Robust optimization framework

2.1 Linear robust optimization

Most robust design methods only take implementation errors into account by assuming a certain distribution for the design variables, but ignore parametric uncertainties. Implementation errors generally arise from fabrication errors, while parametric uncertainties are caused by noise in the system that has not been accounted for or by missing variables that were not considered in the design. In actual environments parameters are subject to uncertainty as well. Robust solutions guarantee near-optimal performance even if the optimum design is not realized. This means that a system designed using robust optimization will perform better than a deterministically designed system in a non-ideal environment.

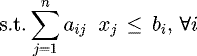

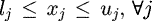

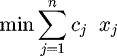

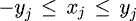

Consider the following linear optimization problem:

(1)

Assume that only the parameter values of matrix A are uncertain i.e., aij are uncertain parameters belonging to a known uncertainty set.

Reference [16] considers column wise uncertainty in matrix A and gives a robust formulation of problem (1) as follows

(2)

Robust optimization can be thought of as a worst-case minimization algorithm. The optimal decision is to protect against worst case scenarios by taking values from within the parameter bound set χ.

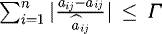

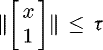

Reference [17] gives an alternative formulation of robustness for problem (1) by introducing the concept of the price of robustness. The uncertain parameters ãij, j ∈ Ji, where Ji is the set of coefficients in row i subject to parameter uncertainty, are assumed to be symmetric and bounded variables that take values in the interval [aij − âij, aij + âij]. A parameter Γi, not necessarily an integer, is introduced that takes values in the interval [0, |Ji|]. The role of Γi is to adjust the robustness of the model against the level of conservatism of the solution. This budget of uncertainty puts a limit on the number of coefficients that can deviate from their nominal values. Γ = 0 means that no coefficient deviates, so we return to the nominal problem (1). On the other hand, if Γ = |Ji| ∀i, every uncertain coefficient may take its worst-case value, which makes the constraint very conservative (Soyster’s approach). For Γ ∈ [0, n] we can adjust the degree of robustness of the problem and hence put a limit on the number of parameters that can be at their worst-case values simultaneously. The objective is to allow change of only up to ⌊Γi⌋ coefficients, while one further coefficient ait changes by (Γi − ⌊Γi⌋)âit.

(3)

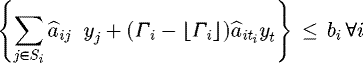

Reference [17] also provides an alternative formulation (4), so the classical approach of (2) can be transformed into

(4)
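The effect of the budget of uncertainty on a single constraint can be illustrated numerically. The following Python sketch assumes the protection term described by Bertsimas and Sim [17]: the ⌊Γ⌋ largest worst-case deviations are counted in full and the next largest is counted with weight Γ − ⌊Γ⌋. The function names `protection` and `robust_feasible` and the numbers in the example are hypothetical and are not taken from the paper.

```python
import math


def protection(x, a_hat, gamma):
    """Extra load on one constraint when the budget of uncertainty is gamma.

    x      : decision vector
    a_hat  : maximum deviation of each uncertain coefficient (same length as x)
    gamma  : budget of uncertainty, 0 <= gamma <= len(x), may be fractional
    """
    if not 0 <= gamma <= len(x):
        raise ValueError("gamma must lie in [0, len(x)]")
    # Contribution of each coefficient if it moves to its worst-case value.
    terms = sorted((ah * abs(xj) for ah, xj in zip(a_hat, x)), reverse=True)
    whole = math.floor(gamma)
    frac = gamma - whole
    total = sum(terms[:whole])          # floor(gamma) coefficients deviate fully
    if whole < len(terms):
        total += frac * terms[whole]    # one more deviates partially
    return total


def robust_feasible(a, a_hat, x, b, gamma):
    """Check a'x + protection <= b for one row of the constraint matrix."""
    nominal = sum(ai * xi for ai, xi in zip(a, x))
    return nominal + protection(x, a_hat, gamma) <= b


if __name__ == "__main__":
    # Hypothetical row: nominal coefficients, deviations and a candidate design.
    a, a_hat, x, b = [1.0, 2.0, 1.0], [0.5, 0.1, 0.2], [1.0, 2.0, 3.0], 9.0
    for gamma in (0.0, 1.0, 1.5, 3.0):
        print(gamma, protection(x, a_hat, gamma), robust_feasible(a, a_hat, x, b, gamma))
```

With Γ = 0 the protection term vanishes and the nominal constraint is recovered, while Γ equal to the number of uncertain coefficients gives the fully conservative Soyster case.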

2.1.1 Example: Robust Air Cleaner Design

Let us apply this formulation to a practical problem illustrated below.

This problem is about a tractor manufacturing firm which wants to produce air cleaners for their tractors [18]. The schematic for the design is shown in Figure 1. The objective is to produce as many air cleaners per month as possible. The variables are the diameters of the exhaust duct x1 and the main body x2. The area of metal required by each air cleaner is given by

The dimensional constraints for the design are:

Another requirement is that the area of each cleaner should be at least 250 square inches; thus,

The number of air cleaners to be produced is constrained by the amount of sheet metal that is available (15,000 square inches). The constraint on production level is that at least 50 cleaners need to be produced. Therefore,

Based on the robust formulation of equation (4), a robust linear problem that takes into account the uncertainty in the length of the exit duct (Γ1 = 1) within 10% is as follows:

(5)

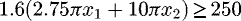

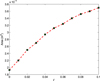

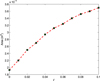

The solution of the above robust program gives an optimal value of 252.31, which is slightly worse than the deterministic optimum of 250.24. A similar robust linear program can be written to include uncertainty in the length of the main body. The plots for this analysis are provided in Figure 2.

When Γ = 2, we return to Soyster’s formulation (2) which gives the following

(6)

Fig. 1 Air cleaner.

Fig. 2 Objective function variation as a function of Γ.

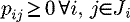

2.1.2 Control of robustness

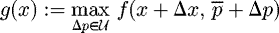

As discussed in Section 2.1, the robust formulation guarantees a deterministically feasible solution to the robust problem only if no more than Γ parameters change, with Γ ∈ [0, |Ji|]. If more than Γ parameters change, then the robust formulation is not guaranteed to satisfy all the constraints, but it can be shown that the optimization problem remains feasible with high probability. Specifically, Bertsimas & Sim [17] have shown that even if more than Γ parameters vary, the probability that the ith constraint will be violated is given by:

(7)

where exp is the exponential function.

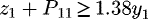

Using the above approximation, the probability of constraint violation for our problem can be calculated. This gives the decision maker a tradeoff between robustness and cost. It can be seen in Figure 3 that the probability of constraint violation decreases as the degree of conservatism increases.
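The trade-off can be tabulated with a few lines of Python. This is a minimal sketch that assumes the bound takes the form exp(−Γ²/(2|Ji|)) derived by Bertsimas and Sim [17] for independent, symmetrically distributed deviations; the function name `violation_bound` and the choice of two uncertain coefficients are illustrative assumptions, not values read from Figure 3.

```python
import math


def violation_bound(gamma, n_uncertain):
    """Assumed bound exp(-gamma^2 / (2 n)) on the probability that a
    constraint with n uncertain coefficients is violated."""
    if n_uncertain <= 0:
        raise ValueError("n_uncertain must be positive")
    if not 0 <= gamma <= n_uncertain:
        raise ValueError("gamma must lie in [0, n_uncertain]")
    return math.exp(-gamma ** 2 / (2.0 * n_uncertain))


if __name__ == "__main__":
    n = 2  # hypothetical number of uncertain coefficients in the constraint
    for gamma in (0.0, 0.5, 1.0, 1.5, 2.0):
        print(f"Gamma = {gamma:.1f}  bound = {violation_bound(gamma, n):.4f}")
```

The bound equals 1 at Γ = 0, where no protection is bought, and decreases monotonically as Γ grows.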

Fig. 3 Trade-off between robustness and conservatism.

2.2 Non-linear robust optimization

According to [19], there are two forms of perturbations: (1) implementation errors, which are caused by an imperfect realization of the desired decision variables x, such as those that may occur during the fabrication process; and (2) parameter uncertainties, which are due to modeling errors during the problem definition, such as noise. Most robust optimization formulations do not take parameter uncertainties into consideration [1].

Let f(x, p̄) be the nominal objective of a design problem. The nominal optimization problem ignores uncertainties. Due to factors that are beyond the designer’s control, such as manufacturing errors and environmental noise, there can be considerable uncertainty associated with the parameters p ∈ Rm. Assume p̄ represents an estimate of the true problem coefficients p. Hence, p̄ + Δp can be used instead of p, where Δp are the parameter uncertainties. Along with the implementation errors Δx, we formulate the robust problem instead of the nominal optimization problem, in which we try to find a design x that minimizes the worst-case cost.

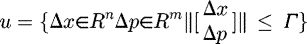

Here Δx and Δp are assumed to lie within the uncertainty set u defined as follows

(8)

where Γ > 0 is the size of the perturbation against which the design needs to be protected and ||∙||2 is the l2 norm.
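To make the notion of worst-case cost concrete, the Python sketch below estimates it by random sampling. It assumes that the uncertainty set is the l2 ball of radius Γ in the stacked vector (Δx, Δp), which is how the text describes it. The objective `nominal_cost` is a made-up function used only for illustration, and sampling yields a lower estimate of the true worst case rather than a certified maximum.

```python
import math
import random


def nominal_cost(x, p):
    # Hypothetical objective, not taken from the paper.
    return p[0] * x[0] ** 2 + p[1] * x[1] ** 2


def worst_case_cost(f, x, p_bar, gamma, n_samples=2000, seed=0):
    """Sampled estimate of max f(x + dx, p_bar + dp) over ||(dx, dp)||_2 <= gamma."""
    rng = random.Random(seed)
    dim = len(x) + len(p_bar)
    worst = f(x, p_bar)  # the zero perturbation always belongs to the set
    if gamma == 0:
        return worst
    for _ in range(n_samples):
        # Random direction on the unit sphere, then a radius inside the ball.
        d = [rng.gauss(0.0, 1.0) for _ in range(dim)]
        norm = math.sqrt(sum(v * v for v in d))
        if norm == 0.0:
            continue
        radius = gamma * rng.random() ** (1.0 / dim)
        delta = [radius * v / norm for v in d]
        dx, dp = delta[:len(x)], delta[len(x):]
        x_pert = [xi + di for xi, di in zip(x, dx)]
        p_pert = [pi + di for pi, di in zip(p_bar, dp)]
        worst = max(worst, f(x_pert, p_pert))
    return worst


if __name__ == "__main__":
    x, p_bar = [1.0, 1.0], [1.0, 1.0]
    for gamma in (0.0, 0.05, 0.1):
        print(gamma, worst_case_cost(nominal_cost, x, p_bar, gamma))
```

A robust design would then be obtained by minimizing this worst-case cost over x, for which a proper inner maximization routine would replace the sampling loop.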

For simplicity let’s define

Having defined the ellipsoidal uncertainty set and the worst-case cost of the objective function, the robust optimization problem which minimizes the worst case cost is given as follows

For a constrained problem with ellipsoidal parametric uncertainties such that Γ = 1, the robust formulation would be as follows

(9)

For polyhedral uncertainty set, u is defined as

(10)

In order to be less restrictive, let’s define a box of uncertainty around the most likely values. Since we are primarily interested in the analysis of parametric data uncertainties, we restrict the ellipsoidal uncertainty set to the parameter uncertainties that are due to modeling errors such as noise in data, which in this case means the coefficients of the variables. This type of robust optimization formulation requires only modest assumptions about distributions, such as mean and bounded support [20], which makes knowledge of the underlying probability distributions of the stochastic variables unnecessary.

(11)

For linear constraints of the form a′x + b ≤ 0, robustness can be introduced by incorporating robustness level Γ into the constraint as follows

(12)
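As an illustration of how a robustness level enters a linear constraint, the Python sketch below treats the simplest case. It assumes that only the coefficient vector a is uncertain and that its perturbation Δa lies in an l2 ball of radius Γ; by the Cauchy-Schwarz inequality the worst case of (a + Δa)′x + b is then a′x + Γ||x||2 + b. This form is an assumption made for the example and may differ in detail from equation (12); the function names and numbers are hypothetical.

```python
import math


def robust_lhs(a, x, b, gamma):
    """Worst case of (a + da)'x + b over all ||da||_2 <= gamma."""
    nominal = sum(ai * xi for ai, xi in zip(a, x)) + b
    return nominal + gamma * math.sqrt(sum(xi * xi for xi in x))


def worst_perturbation(x, gamma):
    """Perturbation da that attains the worst case: gamma * x / ||x||_2."""
    norm = math.sqrt(sum(xi * xi for xi in x))
    if norm == 0.0:
        return [0.0] * len(x)
    return [gamma * xi / norm for xi in x]


if __name__ == "__main__":
    a, x, b = [1.0, -2.0], [3.0, 4.0], -1.0  # hypothetical data
    for gamma in (0.0, 1.0, 2.0):
        value = robust_lhs(a, x, b, gamma)
        print(gamma, value, "feasible" if value <= 0 else "violated")
```

In this made-up example the design satisfies the constraint for Γ up to 1.2 and violates it beyond that, which shows how a larger Γ shrinks the feasible region.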

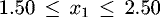

2.2.1 Example: Robust design of a cantilever beam

The working of the method is demonstrated through an engineering design example from [4]. The cantilever beam in Figure 4 is designed against yielding due to bending stress while the cross-sectional area is kept as small as possible. Five random variables are considered, including two design variables x = [x1, x2]T = [b, h]T and three design parameters p = [p1, p2, p3]T = [R, Q, L]T. Here b and h are the dimensions of the cross-section, L is the length of the beam, Q is the external force and R is the allowable stress of the beam. The objective is to minimize the cross-sectional area of the beam while maximizing its tensile strength. For feasibility the ratio h/b should also be less than 2. The distributions for the design variables and parameters are given in Table 1.

The mathematical model is expressed as follows:

(13)

In this case the parametric uncertainties mean uncertainties in noisy data which manifest themselves in the form of coefficient uncertainties of variables. The robust optimization formulation of the above deterministic problem based on the methodology described above is as follows:

(14)

Here Δp represents the parametric uncertainties and Δx represents the implementation errors.

The results of the optimization are given in Figure 5. Maximum uncertainty was constrained at 10% of the nominal values of the parameters, which is the value of the standard deviation of the parameters. The flexibility provided by Γ is that it can adjust the design solution based on the level of robustness desired by the designer. When Γ = 0, the robust formulation returns the conventional deterministic solution, and when the uncertainty in the design variables and parameters is restricted to 1% the optimization returns an area that is bigger, as expected, to accommodate all the perturbations in the design space. Compared to the other methods discussed in [4], this method is more conservative, but it has no probability of constraint violation, as it will always satisfy the strength constraint with a probability of 1.

Fig. 4 Cantilever beam.

Table 1 Distribution of random variables.

Fig. 5 Solution of the beam example as a function of Γ.

3 Robust response surface with uncertain data

Response Surface Methodology (RSM) is an important meta-modeling technique for finding a low-order representation of a non-trivial process involving multiple design variables. Since the exact relationship between the variables and the response is unknown, RSM is used to determine the coefficients of a linear low-order polynomial model representation of the response function. A first-order response surface model can be represented by the following

Since a first-order function cannot accommodate curvature in the system, and third- and higher-order models become too complicated, in most applications the model is restricted to a second-order polynomial as follows [21].

For a 2 variable system, this can be simplified to

(15)

Most of the time we have an overdetermined system with a set of equations Xβ = Y, where the data matrices X and Y are known. These types of systems are usually solved by minimizing the sum of the squared residuals, i.e. the differences between the actual values and the values obtained from the fitted model. This method is known as the method of least squares. In the context of our model

The objective is to minimize the squared error between the fitted model and the actual value by using the residual as the objective function

(16)

There is an analytical closed form for determining the coefficients β using the method of least squares which is

(17)
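The least-squares fit of a second-order response surface can be sketched in plain Python. The example assumes that the closed form referred to above is the usual normal-equations solution β = (XᵀX)⁻¹XᵀY and that the two-variable model has the terms 1, x1, x2, x1², x2² and x1x2. The coefficient values and the three-level grid are hypothetical and serve only to check that the fit recovers known coefficients from noise-free data.

```python
def design_row(x1, x2):
    """Regressors of a full second-order model in two variables."""
    return [1.0, x1, x2, x1 * x1, x2 * x2, x1 * x2]


def solve(A, b):
    """Solve A z = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [row[:] + [bi] for row, bi in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        if abs(M[pivot][col]) < 1e-12:
            raise ValueError("matrix is singular to working precision")
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    z = [0.0] * n
    for i in reversed(range(n)):
        s = sum(M[i][j] * z[j] for j in range(i + 1, n))
        z[i] = (M[i][n] - s) / M[i][i]
    return z


def least_squares(X, Y):
    """Normal-equations solution of min ||X beta - Y||^2."""
    m = len(X[0])
    XtX = [[sum(row[i] * row[j] for row in X) for j in range(m)] for i in range(m)]
    XtY = [sum(row[i] * y for row, y in zip(X, Y)) for i in range(m)]
    return solve(XtX, XtY)


if __name__ == "__main__":
    levels = (-1.0, 0.0, 1.0)
    X = [design_row(u, v) for u in levels for v in levels]
    true_beta = [1.0, -2.0, 0.5, 3.0, 1.5, -1.0]  # hypothetical coefficients
    Y = [sum(c * v for c, v in zip(true_beta, row)) for row in X]
    print([round(b, 6) for b in least_squares(X, Y)])
```

For ill-conditioned data an orthogonal factorization is numerically preferable to forming XᵀX, but the normal equations keep the sketch close to the closed form.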

Assume we face the same type of uncertainties in the data as in the previous sections, which might arise from manufacturing errors or implementation errors and can perturb the elements of matrix X, of Y, or of both, in which case the β’s become suboptimal. We assume that the coefficients of matrices X and Y are unknown but bounded, as before.

We solve this problem using two methods: the Robust Least Squares method and the Regularization method.

3.1 Robust Least Square (RLS) method

We employ the method of Robust Least Squares (RLS) [23] to determine the optimal β’s in this case. The RLS method assumes that the data matrices are subject to deterministic perturbations such that instead of the pair (X, Y) we have the perturbed pair (X + ΔX, Y + ΔY), where Δ = [ΔX ΔY] is an unknown but bounded matrix whose norm is at most ρ, where ρ ≥ 0 is provided. So we minimize the following residual instead of the one in equation (16).

(18)

It can be seen that for ρ = 0, we recover the original least squares problem.

When ρ = 1, the worst-case residual is given by

(19)
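The worst-case residual can be checked numerically. The Python sketch below assumes the unstructured setting of El Ghaoui and Lebret, in which the Frobenius norm of [ΔX ΔY] is bounded by ρ and the worst-case residual has the closed form ||Xβ − Y||2 + ρ√(||β||2² + 1). It also constructs a rank-one perturbation that attains this value. The data and function names are hypothetical.

```python
import math


def residual(X, Y, beta):
    return [sum(xij * bj for xij, bj in zip(row, beta)) - yi
            for row, yi in zip(X, Y)]


def norm2(v):
    return math.sqrt(sum(vi * vi for vi in v))


def worst_case_residual(X, Y, beta, rho):
    """Assumed closed form: ||X beta - Y|| + rho * sqrt(||beta||^2 + 1)."""
    return norm2(residual(X, Y, beta)) + rho * math.sqrt(sum(b * b for b in beta) + 1.0)


def worst_perturbation(X, Y, beta, rho):
    """Rank-one [dX dY] with Frobenius norm rho that attains the worst case."""
    r = residual(X, Y, beta)
    nr = norm2(r)
    # Unit vector along the nominal residual (any unit vector if it is zero).
    u = [ri / nr for ri in r] if nr > 0 else [1.0] + [0.0] * (len(r) - 1)
    v = list(beta) + [-1.0]
    nv = norm2(v)
    delta = [[rho * ui * vj / nv for vj in v] for ui in u]
    dX = [row[:-1] for row in delta]
    dY = [row[-1] for row in delta]
    return dX, dY


if __name__ == "__main__":
    X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # hypothetical data
    Y = [1.0, 2.0, 4.0]
    beta = [1.0, 2.0]
    for rho in (0.0, 0.5, 1.0):
        print(rho, worst_case_residual(X, Y, beta, rho))
```

Setting ρ = 0 returns the ordinary least-squares residual, in line with the remark above.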

A unique solution xRLS is given by

(20)

where X† denotes the Moore-Penrose pseudoinverse of X and λ and τ are the unique optimal points of the following problem.

(21)

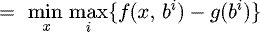

For comparison purposes, the results of this method will be compared with the one proposed by [22], which is based on minimizing the maximum deviation by building a confidence interval B around the coefficient estimates (β). It is assumed that the true β is some element b of B. The method optimizes the following problem

(22)

where b1, b2, …, bM are the extreme points of B.

3.1.1 Example: Process robustness under uncertain data

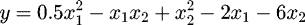

To illustrate the working of this method, an example application of robust manufacturing is taken from [22]. In semiconductor manufacturing we would like the oxide thickness on a wafer to be as close as possible to the target mean thickness. Suppose the semiconductor manufacturing plant has two controllable variables x1 and x2. Table 2 shows the experiment that was performed. The objective is to find operating conditions that give a minimum mean response while making the variability of the response as small as possible. In the problem, the true function relating the performance response y and the design variables x1 and x2 is given by the quadratic function

(23)

with the constraints as follows

(24)

The optimal solution to this deterministic problem is (2.8, 3.2) for an optimal value of y = −19.6. It is then supposed that the objective function is not known. A design of experiment study is carried out to estimate the objective function using a 23 orthogonal array experiment with x1,2 = −1, 0, 1 as shown in Table 2. An error term ε ~ N (0, 1) is added to the objective function equation to introduce random errors. Monte Carlo simulation is used to analyze the performance of different methodologies compared to the RLS method discussed in the previous section.

The Monte Carlo simulation is performed 100 times and the canonical method of least squares is used to estimate the response surface and subsequent optimal values for the problem. The robust optimization method of Xu & Albin is also used to calculate the robust optimal values, the values of which are given in their paper. They are compared with the RLS method given in the previous section for analysis.

Table 3 indicates that the RLS approach achieves better results for mean performance than the robust optimization model of [22] for this test problem using 100 Monte Carlo simulation runs. It turns out that the robust approach of Xu & Albin is slightly more conservative than the one given in this paper. This result cannot, however, be generalized to high-dimensional equations with ρ > 1, where the polytope B can turn out to be less conservative but more computationally expensive than the RLS approach.

Table 2 Design of experiments to study the process robustness.

Table 3 Comparison of optimal results using different approaches.

3.2 Regularization method

Regularization methods provide an alternate methodology for managing uncertainty in the data matrices X and Y, particularly if these matrices are sensitive to errors or are ill-conditioned [23]. In order to restrict the perturbation on β and reduce sensitivity, these methods place a bound on the β vector so that we minimize ||Xβ − Y||² + Ω(β), where Ω is some suitable norm. This method is similar to the classical Tikhonov regularization method when Ω(β) = μ||β||², where μ > 0 is a regularization parameter that improves the conditioning of the problem, thus making it more suitable for a solution. The solution β is found by solving the following problem

(25)
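The stabilizing effect of the regularization parameter can be seen on a small example. The Python sketch below assumes the standard Tikhonov solution β = (XᵀX + μI)⁻¹XᵀY and, to stay short, handles only a model with two coefficients so that the 2×2 system can be solved in closed form. The nearly collinear data and the function name `tikhonov_2param` are hypothetical.

```python
import math


def tikhonov_2param(X, Y, mu):
    """Solve (X'X + mu I) beta = X'Y for a design matrix with two columns."""
    s11 = sum(r[0] * r[0] for r in X) + mu
    s12 = sum(r[0] * r[1] for r in X)
    s22 = sum(r[1] * r[1] for r in X) + mu
    t1 = sum(r[0] * y for r, y in zip(X, Y))
    t2 = sum(r[1] * y for r, y in zip(X, Y))
    det = s11 * s22 - s12 * s12
    if abs(det) < 1e-14:
        raise ValueError("regularized normal matrix is singular")
    # Cramer's rule for the 2x2 system.
    return [(s22 * t1 - s12 * t2) / det, (s11 * t2 - s12 * t1) / det]


if __name__ == "__main__":
    # Hypothetical data with two nearly collinear columns.
    X = [[1.0, 1.0], [1.0, 1.1], [1.0, 0.9], [1.0, 1.05]]
    Y = [row[0] * 1.0 + row[1] * 2.0 for row in X]
    for mu in (0.0, 0.1, 0.5):
        beta = tikhonov_2param(X, Y, mu)
        print(mu, beta, math.hypot(beta[0], beta[1]))
```

With μ = 0 the ordinary least-squares coefficients are recovered, and increasing μ shrinks the norm of β at the cost of some bias.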

Suppose instead that the elements of matrices X and Y are not nominal values on which perturbations ΔX and ΔY occur, i.e., X and Y themselves consist of data that contain measurement errors. In this case it is hard to estimate a bound ρ on ΔX and ΔY. Reference [23] proposes a Total Least Squares (TLS) methodology for these problems, in which case μ = −σ², where σ is the smallest singular value of [X Y]. Hence βTLS = (XᵀX − σ²I)⁻¹XᵀY.

When we apply TLS to the example problem in Section 3.1.1, we get the results as shown in Table 4.

The TLS method gives worse values than all the methods considered before, but this is to be expected since it does not consider any bound on the perturbation of the data.

Table 4 Optimal result using TLS approach.

4 Conclusions

Three methodologies for the robust optimization of linear and non-linear problems were discussed and implemented on practical problems. The methods provide the advantages associated with including uncertainty in the model as a safeguard against implementation errors and parametric uncertainties. Uncertainties in response surface models were also considered, and simulations show significant improvement of the objective and a decrease in the variability of the response using the proposed method. The results point to viable methods that can be used for the robust optimization of mechanical and process design problems under restricted but reasonable perturbations.

Acknowledgments

The author would like to thank his advisor Prof. Daniel D. Frey for his help with this paper and for reviewing the draft.

References

1. S. Sundaresan, K. Ishii, D.R. Houser, A robust optimization procedure with variations on design variables and constraints, Eng. Optim. 24, 101–117 (1995)

2. A. Parkinson, C. Sorensen, N. Pourhassan, A general approach for robust optimal design, J. Mech. Des. 115, 74–80 (1993)

3. K.H. Lee, G.J. Park, Robust optimization considering tolerances of design variables, Comput. Struct. 79, 77–86 (2001)

4. X. Du, W. Chen, Towards a better understanding of modeling feasibility robustness in engineering design, J. Mech. Des. 122, 385–394 (2000)

5. D. Ullman, Robust decision-making for engineering design, J. Eng. Des. 12, 3–13 (2001)

6. A. Zakarian, J.W. Knight, L. Baghdasaryan, Modelling and analysis of system robustness, J. Eng. Des. 18, 243–263 (2007)

7. J. Olvander, Robustness considerations in multi-objective optimal design, J. Mech. Des. 16, 511–523 (2005)

8. J.S. Kang, M.H. Suh, Robust economic optimization of process design under uncertainty, Eng. Optim. 36, 51–75 (2004)

9. J.S. Kang, T.Y. Lee, D.Y. Lee, Robust optimization for engineering design, Eng. Optim. 44, 175–194 (2012)

10. L. Wang, X. Wang, X. Yong, Hybrid reliability analysis of structures with multi-source uncertainties, Acta Mech. 225, 413–430 (2014)

11. L. Wang, X. Wang, R. Wang, X. Chen, Reliability-based design optimization under mixture of random, interval and convex uncertainties, Arch. Appl. Mech. 86, 1341–1367 (2016)

12. L. Wang, X. Wang, H. Su, G. Lin, Reliability estimation of fatigue crack growth prediction via limited measured data, Int. J. Mech. Sci. 121, 44–57 (2017)

13. W. Chen, M.M. Wiecek, Compromise programming approach to robust design, J. Mech. Des. 121, 179–188 (1999)

14. X. Lu, X. Li, Perturbation theory based robust design under model uncertainty, J. Mech. Des. 131, 111006 (2009)

15. B. Huang, X. Du, Analytical robustness assessment for robust design, Struct. Multidiscip. Optim. 34, 123–137 (2007)

16. A.L. Soyster, Convex programming with set-inclusive constraints and applications to inexact linear programming, Oper. Res. 21, 1154–1157 (1973)

17. D. Bertsimas, M. Sim, The price of robustness, Oper. Res. 52, 35–53 (2004)

18. S. Advani, Linear programming approach to air-cleaner design, Oper. Res. 22, 295–297 (1974)

19. D. Bertsimas, O. Nohadani, Nonconvex robust optimization for problems with constraints, INFORMS J. Comput. 22, 22–58 (2009)

20. X. Chen, M. Sim, P. Sun, A robust optimization perspective on stochastic programming, Oper. Res. 55, 1058 (2007)

21. D.C. Montgomery, Introduction to Statistical Quality Control (John Wiley & Sons Inc., Hoboken, NJ, 2009)

22. D. Xu, S.L. Albin, Robust optimization of experimentally derived objective functions, IIE Trans. 35, 793–802 (2003)

23. L. El Ghaoui, H. Lebret, Robust solutions to least-squares problems with uncertain data, SIAM J. Matrix Anal. Appl. 18, 1035 (1997)

Cite this article as: Hassan J. Bukhari, Robust design optimization using the price of robustness, robust least squares and regularization methods, Int. J. Simul. Multidisci. Des. Optim. 8, A13 (2017)
